XtreemFS Installation and User Guide

The XtreemFS Installation and User Guide

Version 1.5.1

XtreemFS is available from the XtreemFS website (www.XtreemFS.org).

This document is © 2009-2015 by Björn Kolbeck, Jan Stender, Michael Berlin, Christoph Kleineweber, Matthias Noack, Paul Seiferth, Felix Langner, NEC HPC Europe, Felix Hupfeld, Juan Gonzales, Patrick Schäfer, Lukas Kairies, Jens V. Fischer, Johannes Dillmann, Robert Schmidtke. All rights reserved.

Contents

1 Quick Start

2 About XtreemFS

2.1 What is XtreemFS?

2.2 Is XtreemFS suitable for me?

2.3 Core Features

2.4 Architecture

3 XtreemFS Services

3.1 Installation

3.1.1 Prerequisites

3.1.2 Installing from Pre-Packaged Releases

3.1.3 Installing from Sources

3.2 Configuration

3.2.1 A Word about UUIDs

3.2.2 Configuration for mixed IPv4 / IPv6 networks and NATs

3.2.3 Automatic DIR Discovery

3.2.4 Authentication

3.2.5 Configuring SSL Support

3.2.6 Running XtreemFS on SSDs

3.2.7 List of Configuration Options

3.3 Execution and Monitoring

3.3.1 Starting and Stopping the XtreemFS services

3.3.2 Running multiple OSDs per Machine

3.3.3 Monitoring OSD's storage devices

3.3.4 Web-based Status Page

3.3.5 DIR Service Monitoring

3.3.6 Monitoring Services with SNMP

3.3.7 Monitoring with Ganglia

3.4 Troubleshooting

4 XtreemFS Client

4.1 Installation

4.1.1 Prerequisites

4.1.2 Installing from Pre-Packaged Releases

4.1.3 Installing from Sources

4.2 Volume Management

4.2.1 Creating Volumes

4.2.2 Deleting Volumes

4.2.3 Listing all Volumes

4.3 Accessing Volumes

4.3.1 Mounting and Un-mounting

4.3.2 Mount Options

4.3.3 /etc/fstab Integration

4.3.4 Asynchronous Writes Support

4.3.5 Object Cache

4.4 Troubleshooting

5 XtreemFS Tools

5.1 Installation

5.1.1 Prerequisites

5.1.2 Installing from Pre-Packaged Releases

5.1.3 Installing from Sources

5.2 Admin Tools

5.2.1 MRC Database Backups and Conversion

5.2.2 Scrubbing and Cleanup

5.2.3 Setting the OSD Status

5.2.4 Draining OSDs

5.3 User Tools

5.3.1 xtfsutil for Files

5.3.2 xtfsutil for Volumes

5.3.3 Changing OSD and Replica Selection Policies

5.3.4 Setting and Listing Policy Attributes

5.3.5 Modifying Access Control Lists

5.3.6 Snapshots

5.4 Test Tools

6 Replication

6.1 Read/Write File Replication

6.1.1 Technical Details

6.1.2 Limitations

6.1.3 Setup

6.2 Read-Only File Replication

6.2.1 Limitations

6.2.2 Setup

6.3 MRC and DIR Replication

6.3.1 Technical Details

6.3.2 Setup

7 Policies

7.1 Authentication Policies

7.1.1 UNIX uid/gid - NullAuthProvider

7.1.2 Plain SSL Certificates - SimpleX509AuthProvider

7.2 Authorization Policies

7.3 OSD and Replica Selection Policies

7.3.1 Attributes

7.3.2 Predefined Policies

7.3.3 OSD Selection based on Custom Attributes

7.3.4 Vivaldi

7.4 Striping Policies

7.5 Plug-in Policies

A Support

B Hadoop Integration

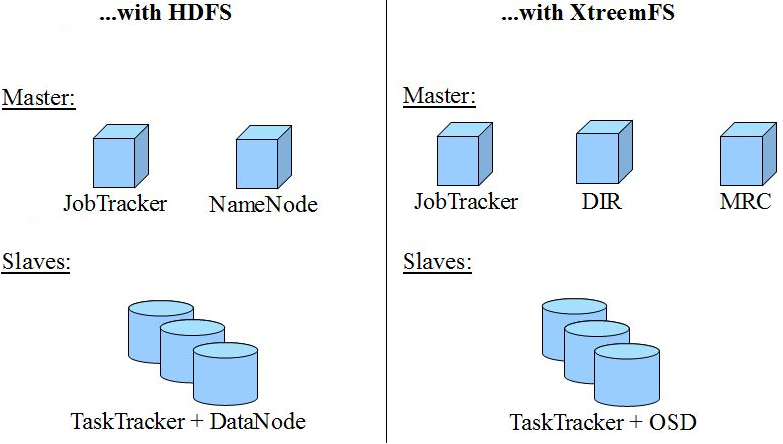

B.1 Introduction

B.2 Quick Start

C Client Library libxtreemfs

C.1 General Interface

C.2 Using the C++ libxtreemfs

C.3 Using the libxtreemfs for Java

D Command Line Utilities

Index

Changes

Summary of important changes in release 1.5.1:

- Improved Hadoop support

The Hadoop adapter supports Hadoop 2.x and other applications running on the YARN platform.

- Consistent adding and removing replicas for R/W replication

Replica consistency is ensured while replicas are added or removed, and xtfs_scrub can replace failed replicas automatically.

- Improved SSL mode

The SSL/TLS version is selectable, strict certificate chain checks are possible, and the SSL code on the client and server side was improved.

- Better support for mounting XtreemFS using /etc/fstab

All mount parameters can be passed to the client with mount.xtreemfs -o option=value.

- Initial version of an LD_PRELOAD based client

The client comes in the form of a library that can be linked to an application via LD_PRELOAD. File system calls to XtreemFS are forwarded directly to the services without FUSE. The client is intended for systems without FUSE or for performance-critical applications (experimental).

- The size of a volume can be limited

Added quota support at the volume level. The capacity limits are currently checked by the MRC when a file is opened.

- Minor bugfixes and improvements across all components.

See the CHANGELOG for more details and references to the issue numbers.

- OSD health monitoring

OSDs can report their health to the DIR, e.g. as determined by SMART values. The results are aggregated in the DIR web interface. The default OSD selection policy can skip unhealthy OSDs.

Summary of important changes in release 1.5:

- Improved Hadoop support

Added support for multiple volumes, and read and write buffers to improve performance. See section B.1.

- SSD support

Support for multiple OSD storage threads to increase parallelism. See section 3.2.6.

- Replication Status Page

A status web page in the DIR web interface shows the current primary and backup replicas of replicated files.

- Multi-Homing Support

XtreemFS can now be made available for multiple networks and clients will pick the correct address automatically. See option .

- Multiple OSDs per Machine

Support for multiple OSDs per machine (e.g. one for each disk) through the xtreemfs-osd-farm init.d script. See section 3.3.2.

- Improved Checksum Support

xtfs_scrub now repairs replicas with an invalid checksum automatically.

- Major bugfixes for Read/Write and Read-Only Replication

Problems with the fail-over of replicas were fixed.

- Minor bugfixes and improvements across all components.

See the CHANGELOG for more details and references to the issue numbers.

Summary of important changes in release 1.4:

- Improved Stability

Fixed client crashes when timeouts occurred. Fixed server crashes and issues with the R/W replication.

- Full support for asynchronous writes

If enabled, write() requests will be immediately acknowledged by the client and executed in the background. Outstanding writes are always flushed at close() or fsync*() events. This improves the write throughput, especially in case of connections with high latency. See section 4.3.4 for more information.

- Windows Client (Beta)

Rewrite of Windows Client (based on C++ libxtreemfs) which uses Eldos' Callback File System product instead of Dokan.

- Re-wrote HDFS interface implementation

Use XtreemFS as a replacement for HDFS in your Hadoop setup. See section B.1 for how to use it.

- libxtreemfs for Java

Access XtreemFS directly from your Java application. See section C.3 for how to use it.

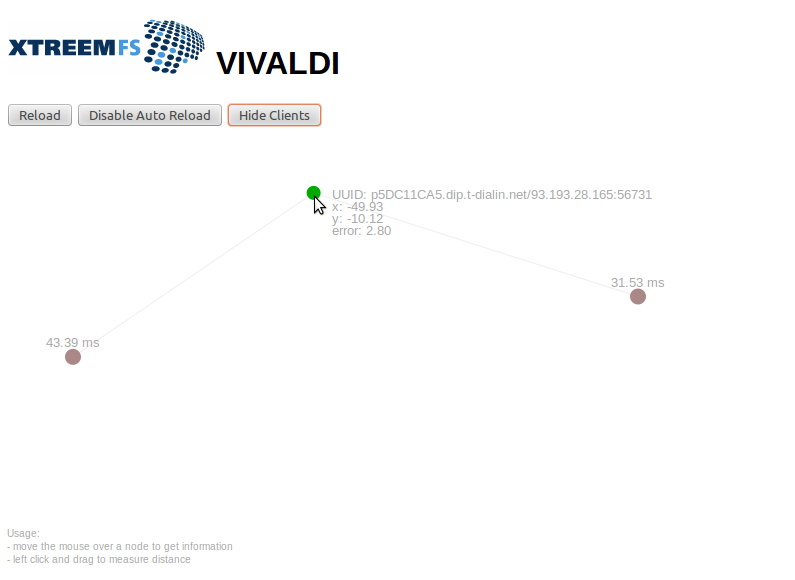

- Re-added and improved Vivaldi support

Vivaldi is now directly integrated into the client, and a visualization of the coordinates, including the client coordinates, is available. See section 7.3.4.

- Support for custom attributes in the OSD Selection policies

Added support for OSD selection based on custom attributes, e.g. assign a country code to every OSD as a custom attribute and limit the placement of files to OSDs based on that attribute (see 7.3.3).

Summary of important changes in release 1.3:

- new client

We have re-written the client from scratch. The new client supports automatic fail-over for replicated files and metadata caching.

- libxtreemfs

libxtreemfs is a convenient C++ library for using XtreemFS directly, without a mounted client or the VFS layer. The new client is built on top of this library. A Java version of libxtreemfs is planned.

- File system snapshots

XtreemFS now supports snapshots. A snapshot reflects a momentary state of a volume or directory. It can be mounted and accessed read-only.

- Full file replication

Starting with this release, XtreemFS supports full file replication. Read/write replicated files offer regular file system semantics and work with all applications.

- DIR, MRC replication

The DIR and MRC can now be replicated using the BabuDB database replication. The replication works with a primary and backups. If the primary fails, a backup will automatically take over after a short time.

- xtfsutil

We have replaced all user tools with a single binary. The new tool no longer requires Java.

- OSD drain

With OSD drain, files can be removed from an OSD without interrupting the system. A fully drained OSD can be removed from the system without data loss.

Summary of important changes in release 1.2.1:

- server status

Each server (especially each OSD) has a persistent status, which can be online or dead/removed. This status must be changed manually and is used by the scrubber tool to identify dead OSDs that have been removed from the system.

- enhanced scrubber

The scrubber is now able to remove replicas which are stored on OSDs that are marked as dead/removed. The scrubber will create new replicas for that file if a complete replica still exists and a sufficient number of OSDs is available. In addition, the scrubber marks replicas as "complete" if they contain all objects of the original file.

This is a summary of the most important changes in release 1.2:

- renamed binaries

We renamed most binaries to conform with Linux naming conventions, e.g. xtfs_mount is now mount.xtreemfs. However, we added links with the old names for compatibility. For a full list see Sec. D.

- "Grid SSL" mode

In this mode, SSL is only used for authentication (handshake) and regular TCP is used for communication afterwards. For more details see Sec. 3.2.5.

- the xctl utility

The new release includes a command line utility xctl for starting and stopping the services. This tool is useful if you don't want a package based installation or if you don't have root privileges.

- vivaldi

XtreemFS now includes modules for calculating Vivaldi network coordinates to reflect the latency between OSDs and clients. An OSD and replica selection policy for Vivaldi is also available. For details, see Sec. 7.3.4.

Chapter 1

Quick Start

This is the very short version to help you set up a local installation of XtreemFS.

- Download XtreemFS RPMs/DEBs and install them:

  - Download the RPMs or DEBs for your system from the XtreemFS website (http://www.xtreemfs.org)

  - Open a root console (su or sudo)

  - Install the packages, e.g. with rpm -Uhv xtreemfs*-1.3.x.rpm

- Start the Directory Service:

/etc/init.d/xtreemfs-dir start

- Start the Metadata Server:

/etc/init.d/xtreemfs-mrc start

- Start the OSD:

/etc/init.d/xtreemfs-osd start

- If not already loaded, load the FUSE kernel module:

modprobe fuse

- Depending on your distribution, you may have to add users to a special group to allow them to mount FUSE file systems. In openSUSE users must be in the group trusted, in Ubuntu in the group fuse. You may need to log out and log in again for the new group membership to become effective.

- You can now close the root console and work as a regular user.

- Wait a few seconds for the services to register at the directory service. You can check the registry by opening the DIR status page at http://localhost:30638 in your favorite web browser.

- Create a new volume with the default settings:

mkfs.xtreemfs localhost/myVolume

- Create a mount point:

mkdir ~/xtreemfs

- Mount XtreemFS on your computer:

mount.xtreemfs localhost/myVolume ~/xtreemfs

- Have fun ;-)

- To un-mount XtreemFS:

umount.xtreemfs ~/xtreemfs

You can also mount this volume on remote computers. First make sure that the ports 32636, 32638 and 32640 are open for incoming TCP connections. You must also specify a hostname that can be resolved by the remote machine! This hostname has to be used instead of localhost when mounting.
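To verify from a remote machine that these ports are reachable, a short Python sketch using only the standard socket module can attempt a TCP connection to each of them. The assignment of the ports to the MRC (32636), DIR (32638) and OSD (32640) reflects the default configuration; adjust the mapping if your services listen elsewhere. check_ports is a hypothetical helper name, and a successful connection only shows that something accepts TCP connections on that port.

```python
import socket

# Default service ports; adjust them if your configuration uses other ports.
XTREEMFS_PORTS = {"MRC": 32636, "DIR": 32638, "OSD": 32640}


def check_ports(host, ports=XTREEMFS_PORTS, timeout=2.0):
    """Return {service: reachable} after trying a TCP connection to each port."""
    results = {}
    for service, port in ports.items():
        try:
            # create_connection resolves `host` and tries each returned address.
            with socket.create_connection((host, port), timeout=timeout):
                results[service] = True
        except OSError:
            # Connection refused, timed out, unreachable or unresolvable host.
            results[service] = False
    return results
```

Run it on the remote machine with the hostname you intend to mount from, e.g. check_ports("xtreemfs.example.org") (hypothetical hostname); any False entry points to a firewall, routing or name resolution problem.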

Chapter 2

About XtreemFS

Since you decided to take a look at this user guide, you probably read or heard about XtreemFS and want to find out more. This chapter contains basic information about the characteristics and the architecture of XtreemFS.

2.1 What is XtreemFS?

XtreemFS is a file system for a variety of different use cases and purposes. Since it is impossible to categorize or explain XtreemFS in a single sentence, we introduce XtreemFS by means of its two most significant properties: XtreemFS is a globally distributed and replicated file system.

What makes XtreemFS a distributed file system? We consider a file system as distributed if files are stored across a number of servers rather than a single server or local machine. Unlike local or network file systems, a distributed file system aggregates the capacity of multiple servers. As a globally distributed file system, XtreemFS servers may be dispersed all over the world. The capacity can be increased and decreased by adding and removing servers, but from a user's perspective, the file system appears to reside on a single machine.

What makes XtreemFS a replicated file system? We call it a replicated file system because replication is one of its most prominent features. XtreemFS is capable of maintaining replicas of files on different servers. Thus, files remain accessible even if single servers, hard disks or network connections fail. Besides, replication yields benefits in terms of data rates and access times: different replicas of a file can be accessed simultaneously on different servers, which may lead to better performance than simultaneous accesses to a single server. By placing file replicas close to the consuming users and applications in a globally distributed installation, the effects of network latency and bandwidth reduction in wide area networks can be mitigated. At the same time, replication is transparent to users and applications that work with XtreemFS; the file system controls the life cycle and access of replicas without the need for human intervention or modifications of existing applications.

2.2 Is XtreemFS suitable for me?

If you consider using XtreemFS, you may be a system administrator in search of a better and more flexible alternative to your current data management solution. Or you may be a private user in need of a file system that can be easily set up and accessed from any machine in the world. You might also be someone looking for an open-source solution to manage large amounts of data distributed across multiple sites. In any case, you will wonder if XtreemFS fulfills your requirements. As a basis for your decision, the following two paragraphs point out the characteristics of XtreemFS.

XtreemFS is ...

... an open source file system. It is distributed freely and can be used by anyone without limitations.

... a POSIX file system. Users can mount and access XtreemFS like any other common file system. Applications can access XtreemFS via the standard file system interface, i.e. without having to be rebuilt against a specialized API. XtreemFS supports a POSIX-compliant access control model.

... a multi-platform file system. Server and client modules can be installed and run on different platforms, including most Linux distributions, Solaris, Mac OS X and Windows.

... a globally distributed file system. Unlike cluster file systems, an XtreemFS installation is not restricted to a single administrative domain or cluster. It can span the globe and may comprise servers in different administrative domains.

... a failure-tolerant file system. As stated in the previous section, replication can keep the system alive and the data safe. In this respect, XtreemFS differs from most other open-source file systems.

... a secure file system. To ensure security in an untrusted, worldwide network, all network traffic can be encrypted with SSL connections, and users can be authenticated with X.509 certificates.

... a customizable file system. Since XtreemFS can be used in different environments, we consider it necessary to give administrators the possibility of adapting XtreemFS to the specific needs of their users. Customizable policies make it possible to change the behavior of XtreemFS in terms of authentication, access control, striping, replica placement, replica selection and others. Such policies can be selected from a set of predefined policies, or implemented by administrators and plugged into the system.

XtreemFS is not ...

... a high-performance cluster file system. Even though XtreemFS reaches acceptable throughput rates on a local cluster, it cannot compete with specialized cluster file systems in terms of raw performance numbers. Most such file systems have an optimized network stack and protocols, and a substantially larger development team. If you have huge amounts of data on a local cluster and few requirements other than high throughput rates, a cluster file system is probably the better alternative.

... a replacement for a local file system. Even though XtreemFS can be set up and mounted on a single machine, the additional software stack degrades the performance, which makes XtreemFS a bad alternative.

2.3 Core Features

The core functionality of XtreemFS is characterized by a small set of features, which are explained in the following.

Distribution.

An XtreemFS installation comprises multiple servers that may run on different nodes connected on a local cluster or via the Internet. Provided that the servers are reachable, a client module installed on any machine in the world can access the installation. A binary communication protocol based on Google's Protocol Buffers ensures an efficient communication with little overhead between clients and servers. XtreemFS ensures that the file system remains in a consistent state even if multiple clients access a common set of files and directories. Similar to NFS, it offers a close-to-open consistency model in the event of concurrent file accesses.
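To make the close-to-open guarantee concrete, here is a deliberately simplified, in-memory toy model in Python. The classes Server and OpenFile are hypothetical and do not reflect how the XtreemFS client is implemented; the model only captures the rule that a client is guaranteed to see another client's changes if it opens the file after the writer has closed it. A reader that opened the file earlier gets no such guarantee; the toy model simply never shows it the new data.

```python
class Server:
    """Hypothetical stand-in for the servers holding the authoritative contents."""

    def __init__(self):
        self.files = {}  # path -> bytes


class OpenFile:
    """Hypothetical file handle with close-to-open semantics."""

    def __init__(self, server, path):
        self.server = server
        self.path = path
        # The contents are fetched once, when the file is opened.
        self.data = server.files.get(path, b"")
        self.dirty = False

    def read(self):
        return self.data

    def write(self, data):
        # Writes stay local to this handle until close().
        self.data = data
        self.dirty = True

    def close(self):
        # Modifications are published to the server on close.
        if self.dirty:
            self.server.files[self.path] = self.data
            self.dirty = False


# reader_1 opens before the writer closes, reader_2 opens afterwards.
server = Server()
writer = OpenFile(server, "/shared.txt")
writer.write(b"new contents")
reader_1 = OpenFile(server, "/shared.txt")
writer.close()
reader_2 = OpenFile(server, "/shared.txt")
print(reader_1.read())  # b''
print(reader_2.read())  # b'new contents'
```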

Replication.

Starting with release 1.3, XtreemFS supports the replication of mutable files as well as a replicated Directory Service (DIR) and Metadata and Replica Catalog (MRC). All components in XtreemFS can be replicated for redundancy, which results in a fully fault-tolerant file system. The replication in XtreemFS works with hot backups, which automatically take over if the primary replica fails.

Since version 1.0, XtreemFS supports read-only replication. A file may have multiple replicas, provided that it was explicitly made read-only before, which means that its content cannot be changed anymore. This kind of replication can be used to make write-once files available to many consumers, or to protect them from losses due to hardware failures. Besides complete replicas that are immediately synchronized after having been created, XtreemFS also supports partial replicas that are only filled with content on demand. They can, e.g., be used to make large files accessible to many clients, of which only parts need to be accessed.

Striping.

To ensure acceptable I/O throughput rates when accessing large files, XtreemFS supports striping. A striped file is split into multiple chunks ("stripes"), which are stored on different storage servers. Since different stripes can be accessed in parallel, the whole file can be read or written with the aggregated network and storage bandwidth of multiple servers. XtreemFS currently supports the RAID0 striping pattern, which splits a file into stripes of a fixed size and distributes them across a set of storage servers in a round-robin fashion. The size of an individual stripe as well as the number of storage servers used can be configured on a per-file or per-directory basis.
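The round-robin RAID0 arithmetic can be sketched as follows. This illustrates the layout described above and is not code taken from XtreemFS; the function names are hypothetical, and the 128 KiB stripe size and four OSDs in the example are arbitrary values.

```python
def locate(offset, stripe_size, osd_count):
    """Map a byte offset to (object number, OSD index, offset within the object)
    for a RAID0 layout with fixed-size stripes placed round-robin on OSDs."""
    if offset < 0 or stripe_size <= 0 or osd_count <= 0:
        raise ValueError("offset must be >= 0, stripe_size and osd_count > 0")
    object_no = offset // stripe_size        # index of the fixed-size stripe
    osd_index = object_no % osd_count        # round-robin placement
    offset_in_object = offset % stripe_size  # position inside that stripe
    return object_no, osd_index, offset_in_object


def objects_for_range(offset, length, stripe_size, osd_count):
    """List the (object number, OSD index) pairs touched by an I/O request
    of `length` bytes starting at `offset`."""
    if length <= 0:
        return []
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return [(n, n % osd_count) for n in range(first, last + 1)]


STRIPE_SIZE = 128 * 1024  # 128 KiB, example value
print(locate(1_000_000, STRIPE_SIZE, 4))              # (7, 3, 82496)
print(objects_for_range(0, 300_000, STRIPE_SIZE, 4))  # [(0, 0), (1, 1), (2, 2)]
```

In this example, a 300,000-byte read starting at offset 0 touches three objects on three different OSDs, which is what allows a striped read to use the bandwidth of several servers at once.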

Security.

To enforce security, XtreemFS offers mechanisms for user authentication and authorization, as well as the possibility to encrypt network traffic.

Authentication describes the process of verifying a user's or client's identity. By default, authentication in XtreemFS is based on local user names and depends on the trustworthiness of clients and networks. In case a more secure solution is needed, X.509 certificates can be used.

Authorization describes the process of checking user permissions to execute an operation. XtreemFS supports the standard UNIX permission model, which allows for assigning individual access rights to file owners, owning groups and other users.

Authentication and authorization are policy-based, which means that different models and mechanisms can be used to authenticate and authorize users. Besides, the policies are pluggable, i.e. they can be freely defined and easily extended.

XtreemFS uses unauthenticated and unencrypted TCP connections by default. To encrypt all network traffic, services and clients can establish SSL connections. However, using SSL requires that all users and services have valid X.509 certificates.

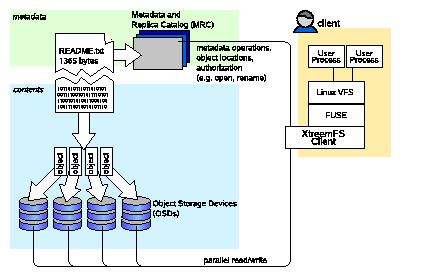

2.4 Architecture

XtreemFS implements an object-based file system architecture (Fig. 2.1): file content is split into a series of fixed-size objects and stored across storage servers, while metadata is stored on a separate metadata server. The metadata server organizes file system metadata as a set of volumes, each of which implements a separate file system namespace in the form of a directory tree.

In contrast to block-based file systems, the management of available and used storage space is offloaded from the metadata server to the storage servers. Rather than inode lists with block addresses, file metadata contains lists of storage servers responsible for the objects, together with striping policies that define how to translate between byte offsets and object IDs. This implies that object sizes may vary from file to file.

Figure 2.1: The XtreemFS architecture and components.

XtreemFS Components.

An XtreemFS installation contains three types of servers that can run on one or several machines (Fig. 2.1):

- DIR - Directory Service

The directory service is the central registry for all services in XtreemFS. The MRC uses it to discover storage servers.

- MRC - Metadata and Replica Catalog

The MRC stores the directory tree and file metadata such as file name, size or modification time. Moreover, the MRC authenticates users and authorizes access to files.

- OSD - Object Storage Device

An OSD stores arbitrary objects of files; clients read and write file data on OSDs.

The client combines these servers into a file system. It mounts one of the volumes of the MRC in a local directory and translates file system calls into RPCs sent to the respective servers.

The client is implemented as a FUSE user-level driver that runs as a normal process. FUSE itself is a kernel-userland hybrid that connects the user-land driver to Linux' Virtual File System (VFS) layer where file system drivers usually live.

Chapter 3

XtreemFS Services

This chapter describes how to install and set up the server side of an XtreemFS installation.

3.1 Installation

When installing XtreemFS server components, you can choose from two different installation sources: you can download one of the pre-packaged releases that we create for most Linux distributions or you can install directly from the source tarball.

Note that the source tarball contains the complete distribution of XtreemFS, which also includes client and tools. Currently, binary distributions of the server are only available for Linux.

3.1.1 Prerequisites

For the pre-packaged release, you will need Sun Java JRE 1.6.0 or newer to be installed on the system.

When building XtreemFS directly from the source, you need a Sun Java JDK 1.6.0 or newer, Ant 1.6.5 or newer and gmake.

3.1.2 Installing from Pre-Packaged Releases

On RPM-based distributions (RedHat, Fedora, SuSE, Mandriva) you can install the package with

$> rpm -i xtreemfs-server-1.3.x.rpm xtreemfs-backend-1.3.x.rpm

For Debian-based distributions, please use the .deb package provided and install it with

$> dpkg -i xtreemfs-server-1.3.x.deb xtreemfs-backend-1.3.x.deb

To install the server components, the following package is required: jre ≥ 1.6.0 for RPM-based releases, java6-runtime for Debian-based releases. If you already have a different distribution of Java6 on your system, you can alternatively install the XtreemFS server packages as follows:

$> rpm -i --nodeps xtreemfs-server-1.3.x.rpm \
    xtreemfs-backend-1.3.x.rpm

on RPM-based distributions,

$> dpkg -i --ignore-depends java6-runtime \
    xtreemfs-server-1.3.x.deb xtreemfs-backend-1.3.x.deb

on Debian-based distributions.

To ensure that your local Java6 installation is used, it is necessary to set the JAVA_HOME environment variable to your Java6 installation directory, e.g.

$> export JAVA_HOME=/usr/java6

Both RPM and Debian-based packages will install three init.d scripts to start up the services (xtreemfs-dir, xtreemfs-mrc, xtreemfs-osd). If you want the services to be started automatically when booting up the system, you can execute insserv <init.d script> (SuSE), chkconfig --add <init.d script> (Mandriva, RedHat) or update-rc.d <init.d script> defaults (Ubuntu, Debian).

3.1.3 Installing from Sources

Extract the tarball with the sources. Change to the top level directory and execute

$> make server

This will build the XtreemFS server and Java-based tools. When done, execute

$> sudo make install-server

to install the server components. Finally, you will be asked to execute a post-installation script

$> sudo /etc/xos/xtreemfs/postinstall_setup.sh

to complete the installation.

3.2 Configuration

After installing the XtreemFS server components, it is advisable to configure the different services. This section describes the different configuration options.

XtreemFS services are configured via Java properties files that can be modified with a normal text editor. Default configuration files for a Directory Service, MRC and OSD are located in /etc/xos/xtreemfs/.

3.2.1 A Word about UUIDs

XtreemFS uses UUIDs (Universally Unique Identifiers) to be able to identify services and their associated state independently from the machine they are installed on. This implies that you cannot change the UUID of an MRC or OSD after it has been used for the first time!

The Directory Service resolves UUIDs to service endpoints, where each service endpoint consists of an IP address or hostname and port number. Each endpoint is associated with a netmask that indicates the subnet in which the mapping is valid. If is enabled, multiple endpoints with different netmasks are assigned to a single UUID. In addition one default mapping, which is valid in all networks with the netmask "*", will be assigned. By default this will be the first available network device with a public address.
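The following Python sketch illustrates how a UUID with several netmask-bound endpoints plus a default "*" mapping could be resolved for a given client address. The data layout, the CIDR notation for netmasks and the rule that the most specific matching subnet wins are assumptions made for illustration; the endpoints are hypothetical.

```python
import ipaddress


def resolve_endpoint(mappings, client_ip):
    """Choose an endpoint for one UUID as seen from `client_ip`.

    `mappings` is a list of (netmask, endpoint) pairs; netmask is either a
    CIDR string such as "192.168.1.0/24" or the wildcard "*" (valid in all
    networks). Assumption: the longest matching prefix wins, "*" is the fallback.
    """
    addr = ipaddress.ip_address(client_ip)
    best_net, best_endpoint, default = None, None, None
    for netmask, endpoint in mappings:
        if netmask == "*":
            default = endpoint
            continue
        net = ipaddress.ip_network(netmask, strict=False)
        # Membership is simply False when IPv4 and IPv6 are mixed.
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_endpoint = net, endpoint
    return best_endpoint if best_net is not None else default


osd_mappings = [
    ("*", "osd1.example.org:32640"),          # hypothetical default mapping
    ("192.168.1.0/24", "192.168.1.10:32640"),
    ("10.0.0.0/8", "10.1.2.3:32640"),
]
print(resolve_endpoint(osd_mappings, "192.168.1.77"))  # 192.168.1.10:32640
print(resolve_endpoint(osd_mappings, "8.8.8.8"))       # osd1.example.org:32640
```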

Changes to the IP address, hostname or port are possible at any time, but have to be propagated to the Directory Service by a . Due to the caching of UUIDs in all components, it can take some time until the new UUID mapping is used by all OSDs, MRCs and clients. The TTL (time-to-live) of a mapping defines how long an XtreemFS component is allowed to keep entries cached. The default value is 3600 seconds (1 hour). It should be set to shorter durations if services change their IP address frequently.
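The effect of the TTL can be pictured with a small Python sketch of a time-limited UUID cache. UUIDCache and the lookup callable (standing in for a Directory Service query) are hypothetical; the sketch only shows why a changed mapping may remain invisible to a component for up to one TTL.

```python
import time


class UUIDCache:
    """Caches UUID -> endpoint mappings and re-resolves them after `ttl` seconds."""

    def __init__(self, lookup, ttl=3600.0, clock=time.monotonic):
        self.lookup = lookup      # hypothetical callable: uuid -> endpoint
        self.ttl = ttl            # 3600 s mirrors the default TTL mentioned above
        self.clock = clock        # injectable for testing
        self._entries = {}        # uuid -> (endpoint, expiry time)

    def get(self, uuid):
        now = self.clock()
        cached = self._entries.get(uuid)
        if cached is not None and now < cached[1]:
            return cached[0]      # still within the TTL, possibly stale
        endpoint = self.lookup(uuid)
        self._entries[uuid] = (endpoint, now + self.ttl)
        return endpoint


# Simulated clock and directory; "osd-uuid-1" is a made-up UUID.
now = [0.0]
directory = {"osd-uuid-1": "10.0.0.1:32640"}
cache = UUIDCache(directory.__getitem__, ttl=3600, clock=lambda: now[0])
print(cache.get("osd-uuid-1"))               # 10.0.0.1:32640
directory["osd-uuid-1"] = "10.0.0.2:32640"   # the OSD changes its address
now[0] = 1800
print(cache.get("osd-uuid-1"))               # 10.0.0.1:32640 (still cached)
now[0] = 3600
print(cache.get("osd-uuid-1"))               # 10.0.0.2:32640 (TTL expired)
```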

To create a globally unique UUID you can use tools like uuidgen. During installation, the post-install script will automatically create a UUID for each OSD and MRC if it does not have a UUID assigned.
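If uuidgen is not available, Python's standard uuid module can produce random (version 4) UUIDs in the same textual format. The helper names and the service names in the loop below are hypothetical.

```python
import uuid


def new_service_uuid():
    """Return a new random (version 4) UUID as a string, e.g. for an MRC or OSD."""
    return str(uuid.uuid4())


def is_valid_uuid(text):
    """Check that `text` is a UUID in the canonical 8-4-4-4-12 hex form."""
    try:
        return str(uuid.UUID(text)) == text.lower()
    except ValueError:
        return False


# One UUID per service that still needs one (names are examples).
for service in ("mrc", "osd0", "osd1"):
    print(service, new_service_uuid())
```

Remember that the UUID of an MRC or OSD must not change after the service has been used for the first time, so generate it once and keep it.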

3.2.2 Configuration for mixed IPv4 / IPv6 networks and NATs

XtreemFS is IPv6 ready, but requires a hostname based configuration for mixed networks due to the way UUIDs are resolved. If no hostname is configured, the default endpoint will be set to the first available network device with a public address. If this is an IPv6 address, clients or services in IPv4 networks won't be able to access the service with the resolved UUID. Additional IPv4 endpoints may be available, but will be bound to a specific netmask.

To enable mixed networks each service has to be configured with a , which is resolvable to the IPv4 address as well as to the IPv6 address by clients and other services. This can be achieved by using the Domain Name System and setting the A and AAAA records for each hostname.
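Before relying on a hostname in a mixed network, it can help to confirm that it resolves to both an IPv4 and an IPv6 address. The Python sketch below (function names hypothetical) asks the system resolver via socket.getaddrinfo; the result reflects the resolver configuration of the machine it runs on, including local hosts files, so it should be run on the client and service machines in question.

```python
import socket


def address_families(hostname):
    """Return the set of address families ("IPv4", "IPv6") that `hostname`
    resolves to via the system resolver."""
    families = set()
    try:
        infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return families  # name could not be resolved at all
    for family, _type, _proto, _canonname, _sockaddr in infos:
        if family == socket.AF_INET:
            families.add("IPv4")
        elif family == socket.AF_INET6:
            families.add("IPv6")
    return families


def usable_in_mixed_network(hostname):
    """True if the hostname resolves to both an IPv4 (A) and an IPv6 (AAAA) address."""
    return address_families(hostname) == {"IPv4", "IPv6"}
```

For example, usable_in_mixed_network("mrc.example.org") (hypothetical hostname) should return True on every machine that has to reach that service.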

Analogous problems occur in systems with NAT (network address translation) like on Amazon Virtual Private Cloud or OpenStack. By default each service will register its local address which is unreachable from other networks. To enable services with NAT you can add the external address to the internal network device with a corresponding route and use the DNS to resolve hostnames as described above. To ensure services from the same network are using a direct route it is advised to enable .

3.2.3 Automatic DIR Discovery

OSDs and MRCs are capable of automatically discovering a Directory Service. If automatic DIR discovery is switched on, the service will broadcast requests to the local LAN and wait up to 10s for a response from a DIR. The services will select the first DIR which responded, which can lead to non-deterministic behavior if multiple DIR services are present. Note that the feature works only in a local LAN environment, as broadcast messages are not routed to other networks. Local firewalls on the computers on which the services are running can also prevent the automatic discovery from working.

Security: The automatic discovery is a potential security risk when used in untrusted environments as any user can start-up DIR services.

A statically configured DIR address and port can be used to disable DIR discovery in the OSD and MRC (see Sec. 3.2.7, dir_service). By default, the DIR responds to UDP broadcasts. To disable this feature, set discover = false in the DIR service config file.

3.2.4 Authentication

Administrators may choose the way of authenticating users in XtreemFS. Authentication Providers are pluggable modules that determine how users are authenticated. For further details, see Sec. 7.1.

To set the authentication provider, it is necessary to set the following property in the MRC configuration file:

authentication_provider = <classname>

By default, the following class names can be used:

- org.xtreemfs.common.auth.NullAuthProvider

uses local user and group IDs

- org.xtreemfs.common.auth.SimpleX509AuthProvider

uses X.509 certificates; user and group IDs are extracted from the distinguished names of the certificates
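Since the configuration files are plain text, the provider can also be switched with a script. The Python sketch below (set_property and set_property_in_file are hypothetical helpers) only understands simple key = value lines and # comments, not the full Java properties syntax with line continuations and escapes.

```python
def set_property(text, key, value):
    """Return `text` with `key` set to `value`.

    Handles plain `key = value` lines and '#' comments only; an existing
    assignment is replaced in place, otherwise the assignment is appended.
    """
    lines = text.splitlines()
    found = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#") or "=" not in stripped:
            continue  # keep blank lines, comments and unparsed lines as they are
        if stripped.split("=", 1)[0].strip() == key:
            lines[i] = f"{key} = {value}"
            found = True
    if not found:
        lines.append(f"{key} = {value}")
    return "\n".join(lines) + "\n"


def set_property_in_file(path, key, value):
    """Rewrite the properties file at `path` with `key` set to `value`."""
    with open(path, encoding="utf-8") as f:
        updated = set_property(f.read(), key, value)
    with open(path, "w", encoding="utf-8") as f:
        f.write(updated)


example = "# MRC settings\nauthentication_provider = org.xtreemfs.common.auth.NullAuthProvider\n"
print(set_property(example, "authentication_provider",
                   "org.xtreemfs.common.auth.SimpleX509AuthProvider"), end="")
```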

3.2.5 Configuring SSL Support

In order to enable certificate-based authentication in an XtreemFS installation, services need to be equipped with X.509 certificates. Certificates are used to establish a mutual trust relationship among XtreemFS services and between the XtreemFS client and XtreemFS services.

Note that it is not possible to mix SSL-enabled and non-SSL services in an XtreemFS installation! If you only need authentication based on certificates without SSL, you can use the "grid SSL" mode. In this mode XtreemFS will only do an SSL handshake and fall back to plain TCP for communication. This mode is insecure (not encrypted and records are not signed) but just as fast as the non-SSL mode. If this mode is enabled, all client tools must be used with the pbrpcg:// scheme prefix.

Each XtreemFS service needs a certificate and a private key in order to be run. Once they have been created and signed, the credentials may need to be converted into the correct file format. XtreemFS services also need a trust store that contains all trusted Certification Authority certificates.

By default, certificates and credentials for XtreemFS services are stored in

/etc/xos/xtreemfs/truststore/certs

Converting PEM files to PKCS#12

The simplest way to provide the credentials to the services is by converting your signed certificate and private key into a PKCS#12 file using openssl:

$> openssl pkcs12 -export -in ds.pem -inkey ds.key \
    -out ds.p12 -name "DS"

$> openssl pkcs12 -export -in mrc.pem -inkey mrc.key \
    -out mrc.p12 -name "MRC"

$> openssl pkcs12 -export -in osd.pem -inkey osd.key \
    -out osd.p12 -name "OSD"

This will create three PKCS#12 files (ds.p12, mrc.p12 and osd.p12), each containing the private key and certificate for the respective service. The passwords chosen when prompted must be set as properties in the corresponding service configuration files.
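When preparing credentials for several services, the three export commands can also be generated from a list. The Python sketch below only assembles the arguments already shown above; passing a list to subprocess.run(cmd, check=True) runs openssl, which then prompts for the export password as usual. SERVICE_CREDENTIALS and pkcs12_export_cmd are hypothetical names.

```python
import shlex

# (file prefix, friendly name) for each service, as in the commands above.
SERVICE_CREDENTIALS = [("ds", "DS"), ("mrc", "MRC"), ("osd", "OSD")]


def pkcs12_export_cmd(prefix, friendly_name):
    """Build the openssl argument list that bundles <prefix>.pem and
    <prefix>.key into <prefix>.p12."""
    return [
        "openssl", "pkcs12", "-export",
        "-in", f"{prefix}.pem",
        "-inkey", f"{prefix}.key",
        "-out", f"{prefix}.p12",
        "-name", friendly_name,
    ]


# Print the commands; pass each list to subprocess.run(..., check=True) to run it.
for prefix, name in SERVICE_CREDENTIALS:
    print(shlex.join(pkcs12_export_cmd(prefix, name)))
```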

Importing trusted certificates from PEM into a JKS

The certificate (or multiple certificates) from your CA (or CAs) can be imported into a Java Keystore (JKS) using the Java keytool which comes with the Java JDK or JRE.

Execute the following steps for each CA certificate using the same keystore file.

$> keytool -import -alias rootca -keystore trusted.jks \
-trustcacerts -file ca-cert.pem

This will create a new Java Keystore trusted.jks with the CA certificate in the current working directory. The password you choose when asked must be set as ssl.trusted_certs.pw in the service configuration files.

Note: If you get the following error

keytool error: java.lang.Exception: Input not an X.509 certificate

remove any text that precedes the -----BEGIN CERTIFICATE----- line from the certificate file.
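If the certificate file starts with several lines of human-readable text (for example, output of openssl x509 -text), the cleanup can be done with sed. A minimal sketch, assuming one PEM certificate per file whose marker is exactly -----BEGIN CERTIFICATE-----; the helper name strip_pem_preamble and the output file name are illustrative only.

```shell
# strip_pem_preamble IN OUT (hypothetical helper)
# Copies IN to OUT, dropping every line before the first
# "-----BEGIN CERTIFICATE-----" marker. If there is no such marker,
# OUT ends up empty.
strip_pem_preamble() {
    sed -n '/^-----BEGIN CERTIFICATE-----/,$p' "$1" > "$2"
}

# Usage:
# strip_pem_preamble ca-cert.pem ca-cert-clean.pem
# keytool -import -alias rootca -keystore trusted.jks \
#     -trustcacerts -file ca-cert-clean.pem
```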

Sample Setup

For a test setup, you can easily set up your own CA (certificate authority) and create and sign certificates using openssl.

- Set up your test CA.

- Create a directory for your CA files

$> mkdir ca

- Create a private key and certificate request for your CA.

$> openssl req -new -newkey rsa:1024 -nodes -out ca/ca.csr \
-keyout ca/ca.key

Enter something like XtreemFS-DEMO-CA as the common name (or something else, but make sure the name differs from the server and client names!).

- Create a self-signed certificate for your CA which is valid for one year.

$> openssl x509 -trustout -signkey ca/ca.key -days 365 -req \
-in ca/ca.csr -out ca/ca.pem

- Create a file with the CA's serial number

$> echo "02" > ca/ca.srl

- Set up the certificates for the services and the XtreemFS Client.

Repeat the following steps for each of dir, mrc, osd and client, replacing SERVICE accordingly.

- Create a private key and certificate request for the service.

Use XtreemFS-DEMO-SERVICE as the common name for the certificate.

$> openssl req -new -newkey rsa:1024 -nodes \
-out SERVICE.req \
-keyout SERVICE.key

- Sign the certificate with your demo CA.

The certificate is valid for one year.

$> openssl x509 -CA ca/ca.pem -CAkey ca/ca.key \
-CAserial ca/ca.srl -req \
-in SERVICE.req \
-out SERVICE.pem -days 365

- Export the service credentials (certificate and private key) as a PKCS#12 file.

For the dir, mrc and osd services, use "passphrase" as export password.

$> openssl pkcs12 -export -in SERVICE.pem -inkey SERVICE.key \
-out SERVICE.p12 -name "SERVICE"

For the XtreemFS Client, leave the export password empty to avoid being asked for the password on mount. If you cannot or do not want to distribute the demo CA to the clients' trusted root CA paths (e.g. on Linux machines), also include your demo CA in the PKCS#12 file so that the server certificates can be verified.

$> openssl pkcs12 -export -in client.pem -inkey client.key \
-out client_ca.p12 -name "client_ca" -certfile ca/ca.pem

Multiple CA certificates (e.g. for a certificate chain) can be included by concatenating them into a new file and passing that file as the argument to the -certfile option.

- Copy the PKCS#12 file to the certificates directory.

$> mkdir -p /etc/xos/xtreemfs/truststore/certs

$> cp SERVICE.p12 /etc/xos/xtreemfs/truststore/certs

- Import your CA's certificate into a trust store and copy the trust store to the certificates directory.

You should answer "yes" when asked "Trust this certificate".

Use "jks_passphrase" as passphrase for the keystore.

$> keytool -import -alias ca -keystore trusted.jks \
-trustcacerts -file ca/ca.pem

$> cp trusted.jks /etc/xos/xtreemfs/truststore/certs

It is sufficient to trust only the CA certificates that directly signed the other services' (and clients') certificates; you do not need to trust the entire chain. For example, if you signed mrc.pem with ca.pem, which in turn was signed with some_ca.pem, you do not need to import some_ca.pem into the keystore.

- Configure the services.

Edit the configuration file of each service and set the following configuration options (see Sec. 3.2 for details), replacing SERVICE with dir, mrc or osd.

ssl.enabled = true

ssl.service_creds.pw = passphrase

ssl.service_creds.container = pkcs12

ssl.service_creds = /etc/xos/xtreemfs/truststore/certs/SERVICE.p12

ssl.trusted_certs = /etc/xos/xtreemfs/truststore/certs/trusted.jks

ssl.trusted_certs.pw = jks_passphrase

ssl.trusted_certs.container = jks

- Start up the XtreemFS services (see Sec. 3.3.1).

- Create a new volume (see Sec. 4.2.1 for details).

Use

$> mkfs.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client_ca.p12 pbrpcs://localhost/test

for SSL-enabled servers, or

$> mkfs.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client_ca.p12 pbrpcg://localhost/test

for Grid-SSL-enabled servers.

- Mount the volume (see Sec. 4.3 for details).

Use

$> mount.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client_ca.p12 pbrpcs://localhost/test /mnt

for SSL-enabled servers, or

$> mount.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client_ca.p12 pbrpcg://localhost/test /mnt

for Grid-SSL-enabled servers.
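For repeatable test installations, the certificate-creation steps of this sample setup can also be run non-interactively. This is a sketch only: the function name make_demo_pki is hypothetical, the common names passed via -subj and the passwords follow the sample values above, and it uses 2048-bit instead of 1024-bit RSA keys because some newer OpenSSL builds reject 1024-bit keys in TLS by default. Importing ca/ca.pem into trusted.jks with keytool and copying the files to /etc/xos/xtreemfs/truststore/certs remain the manual steps described above.

```shell
# make_demo_pki (hypothetical helper): creates a demo CA plus signed
# certificates and PKCS#12 files for dir, mrc, osd and the client in the
# current directory. The body runs in a subshell so "set -e" stays local.
make_demo_pki() (
    set -e
    mkdir -p ca

    # CA private key and certificate request; -subj skips the prompts.
    openssl req -new -newkey rsa:2048 -nodes -out ca/ca.csr \
        -keyout ca/ca.key -subj "/CN=XtreemFS-DEMO-CA"
    # Self-signed CA certificate, valid for one year.
    openssl x509 -trustout -signkey ca/ca.key -days 365 -req \
        -in ca/ca.csr -out ca/ca.pem
    echo "02" > ca/ca.srl

    for SERVICE in dir mrc osd client; do
        # Key and request for the service, signed by the demo CA (one year).
        openssl req -new -newkey rsa:2048 -nodes -out "$SERVICE.req" \
            -keyout "$SERVICE.key" -subj "/CN=XtreemFS-DEMO-$SERVICE"
        openssl x509 -CA ca/ca.pem -CAkey ca/ca.key -CAserial ca/ca.srl \
            -req -in "$SERVICE.req" -out "$SERVICE.pem" -days 365
    done

    # Service credentials protected by the sample password "passphrase".
    for SERVICE in dir mrc osd; do
        openssl pkcs12 -export -in "$SERVICE.pem" -inkey "$SERVICE.key" \
            -out "$SERVICE.p12" -name "$SERVICE" -passout pass:passphrase
    done

    # Client credentials with an empty password, bundled with the demo CA.
    openssl pkcs12 -export -in client.pem -inkey client.key \
        -out client_ca.p12 -name "client_ca" -certfile ca/ca.pem \
        -passout pass:
)
```

Run it in an empty directory, e.g. mkdir demo-pki && cd demo-pki && make_demo_pki.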

Setting passphrases in command line tools

There are two ways to set the passphrase for the trusted certificates and the keystore in command line tools.

- Set the passphrase with the corresponding parameter (see the man page of the respective tool). For example:

$> mount.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client.p12 \
--pkcs12-passphrase=passphrase pbrpcs://localhost/test /mnt

or

$> xtfs_chstatus \
-c /etc/xos/xtreemfs/truststore/certs/client.p12 \
-cpass passphrase \
-t /etc/xos/xtreemfs/truststore/certs/trusted.jks \
-tpass jks_passphrase test-osd online

- Set the corresponding passphrase parameter to "-" and you will be prompted for the passphrase. For example:

$> mount.xtreemfs --pkcs12-file-path=\
/etc/xos/xtreemfs/truststore/certs/client.p12 \
--pkcs12-passphrase=- pbrpcs://localhost/test /mnt

After you run the command, you will be prompted for the passphrase:

No PKCS#12 certificate passphrase was given. Please enter it now:

3.2.6 Running XtreemFS on SSDs

SSDs are increasingly replacing spinning hard disk drives. This trend is also relevant for distributed file systems. As XtreemFS uses an underlying local file system for all services, XtreemFS can be used with SSDs without any modifications.

To make use of the higher performance that an SSD can provide, it may be worthwhile to use multiple storage threads on the XtreemFS OSD. The number of storage threads can be adjusted with the storage_threads parameter in the OSD configuration file (see Sec. 3.2.7).
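To change an option such as storage_threads without editing the file by hand, a small helper can rewrite an existing key = value line or append a new one. This is a sketch under assumptions: the helper name set_option is hypothetical, it treats the file as plain key = value lines and leaves commented-out entries alone, /etc/xos/xtreemfs/osdconfig.properties is an assumed location of the OSD configuration file, and 4 threads is an arbitrary example value rather than a recommendation.

```shell
# set_option FILE KEY VALUE (hypothetical helper)
# Rewrites the first uncommented "KEY = ..." line of FILE as
# "KEY = VALUE", or appends that line if KEY is not set yet.
set_option() (
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || exit 1
    awk -v k="$key" -v v="$value" '
        {
            name = $0
            sub(/[=].*/, "", name)             # keep text left of the first "="
            gsub(/^[ \t]+|[ \t]+$/, "", name)  # trim surrounding blanks
            if (!found && index($0, "=") > 0 && name == k) {
                print k " = " v                # replace the existing entry
                found = 1
                next
            }
            print
        }
        END { if (!found) print k " = " v }    # append a missing entry
    ' "$file" > "$tmp" && cat "$tmp" > "$file"  # cat keeps file permissions
    status=$?
    rm -f "$tmp"
    exit "$status"
)

# Usage (restart the OSD afterwards):
# set_option /etc/xos/xtreemfs/osdconfig.properties storage_threads 4
```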

3.2.7 List of Configuration Options

All configuration parameters that may be used to define the behavior of the different services are listed in this section. Unless marked as optional, a parameter has to occur (exactly once) in a configuration file. Parameters marked as experimental belong to the DIR and MRC replication feature, which is currently under development. We do not recommend changing these options if you want to use XtreemFS in production.

Aside from the following predefined parameters, OSDs also allow you to specify custom configuration parameters. Such parameters may have arbitrary names that start with the prefix "config.". They can be used for a fine-grained, individual assignment of OSDs to new files and replicas. For further details, please refer to Section 7.3.3.

admin_password optional

| Services | DIR, MRC, OSD |

| Values | String |

| Default | |

| Description | Defines the admin password that must be sent to authorize requests like volume creation, deletion or shutdown. The same password is also used to access the HTTP status page of the service (user name is admin). |

authentication_provider

| Services | MRC |

| Values | Java class name |

| Default | org.xtreemfs.common.auth.NullAuthProvider |

| Description | Defines the Authentication Provider used to retrieve the user identity (user ID and group IDs). See Sec. 3.2.4 for details. |

babudb.baseDir

| Services | DIR, MRC |

| Values | absolute file system path to a directory |

| Default | DIR: /var/lib/xtreemfs/dir/database, MRC: /var/lib/xtreemfs/mrc/database |

| Description | The directory in which the Directory Service or MRC stores its database. This directory should never be on the same partition as any OSD data if both services reside on the same machine. Otherwise, deadlocks may occur if the partition runs out of free disk space. |

babudb.cfgFile optional

| Services | DIR, MRC |

| Values | a file name |

| Default | DIR: config.db, MRC: config.db |

| Description | Name of the database configuration file. |

babudb.checkInterval optional

| Services | DIR, MRC |

| Values | a positive integer value |

| Default | DIR: 300, MRC: 300 |

| Description | The number of seconds between two checks of the disk log size for automatic checkpointing. Set this value to 0 to disable automatic checkpointing. |

babudb.compression optional

| Services | DIR, MRC |

| Values | true or false |

| Default | DIR: false, MRC: false |

| Description | Flag that determines whether database content is compressed. |

babudb.debug.level optional

| Services | DIR, MRC |

| Values | 0, 1, 2, 3, 4, 5, 6, 7 |

| Default | DIR: 4, MRC: 4 |

| Description | This is the debug level for BabuDB only. The debug level determines the amount and detail of information written to log files. Any debug level includes log messages from lower debug levels. The following log levels exist:

0 - fatal errors

1 - alert messages

2 - critical errors

3 - normal errors

4 - warnings

5 - notices

6 - info messages

7 - debug messages |

babudb.logDir

| Services | DIR, MRC |

| Values | absolute file system path |

| Default | DIR: /var/lib/xtreemfs/dir/db-log, MRC: /var/lib/xtreemfs/mrc/db-log |

| Description | The directory in which the Directory Service or MRC stores its database logs. This directory should never be on the same partition as any OSD data if both services reside on the same machine. Otherwise, deadlocks may occur if the partition runs out of free disk space. |

babudb.maxLogfileSize optional

| Services | DIR, MRC |

| Values | a positive integer value |

| Default | DIR: 16777216 (16 MiB), MRC: 16777216 (16 MiB) |

| Description | If automatic checkpointing is enabled, a checkpoint is created when the disk log file exceeds maxLogfileSize bytes. The value should be reasonably large to keep the checkpointing rate low. However, it should not be too large, as a large disk log increases the recovery time after a crash. |

babudb.pseudoSyncWait optional

| Services | DIR, MRC |

| Values | a positive integer value |

| Default | DIR: 200, MRC: 0 |

| Description | The BabuDB disk logger can batch multiple operations into a single write+fsync to increase the throughput. This only works if operations are executed in parallel by the worker threads; if you work on a single database, batching becomes less efficient. To circumvent this problem, BabuDB offers a pseudo-sync mode which is similar to the PostgreSQL write-ahead log (WAL). If pseudoSyncWait is set to a value larger than 0, this pseudo-sync mode is enabled. In this mode, insert operations are acknowledged as soon as they have been executed on the in-memory database index. Every pseudoSyncWait ms, the disk logger executes a batch write of up to 500 operations followed by a single sync (see babudb.sync). This mode is considerably faster than synchronous writes, but you can lose data in case of a crash. In contrast to ASYNC mode, the data loss is limited to the operations executed in the last pseudoSyncWait ms. |

babudb.sync

| Services | DIR, MRC |

| Values | ASYNC, SYNC_WRITE_METADATA, SYNC_WRITE, FDATASYNC or FSYNC |

| Default | DIR: FSYNC, MRC: ASYNC |

| Description | The sync mode influences how operations are committed to the disk log before the operation is acknowledged to the caller.

- ASYNC: writes to the disk log are buffered in memory by the operating system. This is the fastest mode, but it can lead to data loss in case of a crash, kernel panic or power failure.

- SYNC_WRITE_METADATA: opens the file with O_SYNC, so the system does not buffer any writes. The operation is acknowledged when the data has been safely written to disk. This mode is slow but offers maximum data safety. However, BabuDB cannot influence the disk drive caches; this depends on the OS and hard disk model.

- SYNC_WRITE: similar to SYNC_WRITE_METADATA, but opens the file with O_DSYNC, which means that only the data is committed to disk. This can lead to some data loss depending on the implementation of the underlying file system. Linux does not implement this mode.

- FDATASYNC: similar to SYNC_WRITE, but opens the file in asynchronous mode and calls fdatasync() after writing the data to disk.

- FSYNC: similar to SYNC_WRITE_METADATA, but opens the file in asynchronous mode and calls fsync() after writing the data to disk.

For best throughput use ASYNC; for maximum data safety use FSYNC. |

babudb.worker.maxQueueLength optional

| Services | DIR, MRC |

| Values | a positive integer value |

| Default | DIR: 250, MRC: 250 |

| Description | If set to a value larger than 0, this is the maximum number of requests which can be in a worker's queue. This value should be used if you have pseudo-synchronous mode enabled, to ensure that your queues do not grow until you get an out-of-memory exception. Can be set to 0 if pseudo-sync mode is disabled. |

babudb.worker.numThreads optional

| Services | DIR, MRC |

| Values | a non-negative integer value |

| Default | DIR: 0, MRC: 0 |

| Description | The number of worker threads to be used for database operations. As BabuDB does not use locking, each database is handled by only one worker thread. If there are more databases than worker threads, the databases are distributed onto the available threads. The number of threads should be set to a value smaller than the number of available cores to reduce overhead through context switches. You can also set the number of worker threads to 0. This will considerably reduce latency, but may also decrease throughput on a multi-core system with more than one database. |

capability_secret

| Services | MRC, OSD |

| Values | String |

| Default | |

| Description | Defines a shared secret between the MRC and all OSDs. The secret is used by the MRC to sign capabilities, i.e. security tokens for data access at OSDs. In turn, an OSD uses the secret to verify that the capability has been issued by the MRC. |
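Because the same capability_secret must appear in the MRC configuration and in every OSD configuration, it is convenient to generate one random value and distribute it. A minimal sketch, assuming /dev/urandom and od are available; the helper name gen_capability_secret and the 32-byte length are illustrative choices, not XtreemFS requirements.

```shell
# gen_capability_secret (hypothetical helper)
# Prints 32 random bytes from /dev/urandom as 64 lowercase hex digits.
gen_capability_secret() {
    od -An -N32 -tx1 /dev/urandom | tr -d ' \n'
    echo
}

# Usage: generate once, then put the same line into the MRC and all OSD
# configuration files.
# echo "capability_secret = $(gen_capability_secret)"
```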

capability_timeout optional

| Services | MRC |

| Values | seconds |

| Default | 600 |

| Description | Defines the relative time span for which a capability is valid after having been issued. |

checksums.enabled

| Services | OSD |

| Values | true, false |

| Default | false |

| Description | If set to true, the OSD will calculate and store checksums for newly created objects. Each time a checksummed object is read, the checksum will be verified. |

checksums.algorithm

| Services | OSD |

| Values | Adler32, CRC32 |

| Default | Adler32 |

| Description | Must be specified if checksums.enabled is set to true. This property defines the algorithm used to create OSD checksums. |

debug.level optional

| Services | DIR, MRC, OSD |

| Values | 0, 1, 2, 3, 4, 5, 6, 7 |

| Default | 6 |

| Description | The debug level determines the amount and detail of information written to logfiles. Any debug level includes log messages from lower debug levels. The following log levels exist:

0 - fatal errors

1 - alert messages

2 - critical errors

3 - normal errors

4 - warnings

5 - notices

6 - info messages

7 - debug messages |

debug.categories optional

| Services | DIR, MRC, OSD |

| Values | all, lifecycle, net, auth, stage, proc, db, misc |

| Default | all |

| Description | Debug categories determine the domains for which log messages will be printed. By default, there are no domain restrictions, i.e. log messages from all domains will be included in the log. The following categories can be selected:

all - no restrictions on the category

lifecycle - service lifecycle-related messages, including startup and shutdown events

net - messages pertaining to network traffic and communication between services

auth - authentication and authorization-related messages

stage - messages pertaining to the flow of requests through the different stages of a service

proc - messages about the processing of requests

db - messages that are logged in connection with database accesses

misc - any other log messages that do not fit in one of the previous categories

Multiple categories can be specified as a comma- or space-separated list. |

dir_service.host

| Services | MRC, OSD |

| Values | hostname or IP address |

| Default | localhost |

| Description | Specifies the hostname or IP address of the directory service (DIR) at which the MRC or OSD should register. The MRC also uses this Directory Service to find OSDs. If set to .autodiscover, the service will use the automatic DIR discovery mechanism (see Sec. 3.2.3). (Note that the initial '.' is used to avoid ambiguities with hosts called "autodiscover".) |

dir_service.port

| Services | MRC, OSD |

| Values | 1 .. 65535 |

| Default | 32638 |

| Description | Specifies the port on which the remote directory service is listening. Must be identical to listen.port in your directory service configuration. |

discover optional

| Services | DIR |

| Values | true, false |

| Default | true |

| Description | If set to true, the DIR will receive UDP broadcasts and advertise itself in response to XtreemFS components using the automatic DIR discovery mechanism. If set to false, the DIR will ignore all UDP traffic. For details see Sec. 3.2.3. |

flease.dmax_ms optional

| Services | OSD |

| Values | milliseconds |

| Default | 1000 |

| Description | Maximum clock drift between any two clocks in the system. If the actual drift between two server clocks exceeds this value, read-write replication may lead to inconsistent replicas. Since servers automatically synchronize their clocks with the clock on the DIR, however, the default of 1000 ms should be enough in most cases. |

flease.lease_timeout_ms optional

| Services | OSD, MRC |

| Values | milliseconds |

| Default | 14000 |

| Description | Duration of a lease in milliseconds. For read-write-replicated files, the lease timeout specifies the validity time span of a master lease. Shorter lease timeouts guarantee a shorter fail-over period in the event of a server crash, which however comes at the cost of an increased rate of lease negotiations for each open file. The lease timeout should be set to at least three times the value of flease.message_to_ms. |
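The rule of thumb above can be checked mechanically before deploying a configuration. A sketch assuming both values are plain integers in milliseconds; check_flease_timeouts is a hypothetical helper name.

```shell
# check_flease_timeouts LEASE_TIMEOUT_MS MESSAGE_TO_MS (hypothetical helper)
# Succeeds if flease.lease_timeout_ms >= 3 * flease.message_to_ms;
# otherwise prints a warning to stderr and fails.
check_flease_timeouts() {
    if [ "$1" -ge $(( 3 * $2 )) ]; then
        return 0
    fi
    echo "flease.lease_timeout_ms=$1 is below 3 x flease.message_to_ms=$2" >&2
    return 1
}

# The defaults pass: 14000 >= 3 * 500 = 1500.
check_flease_timeouts 14000 500
```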

flease.message_to_ms optional

| Services | OSD |

| Values | milliseconds |

| Default | 500 |

| Description | Time to wait for responses from other OSDs when negotiating leases for replicated files. This value should be larger than the maximum message round-trip time via TCP between any pair of OSDs. |

flease.retries optional

| Services | OSD |

| Values | 1..1000 |

| Default | 3 |

| Description | Number of times to retry acquiring a lease for a replicated file before an IO error is sent to the client. |

geographic_coordinates optional

| Services | DIR, MRC, OSD |

| Values | String |

| Default | |

| Description | Specifies the geographic coordinates which are registered with the directory service. Used e.g. by the web console. |

hostname optional

| Services | MRC, OSD |

| Values | String |

| Default | |

| Description | If specified and multihoming.enabled is false, it defines the host name that is used to register the service at the directory service. If neither hostname nor listen.address is specified, the service itself will search for an externally reachable network interface and advertise its address. To use multiple interfaces, see multihoming.enabled. |

health_script optional

| Services | OSD |

| Values | String |

| Default | |

| Description | If specified, the OSD will use this script to determine the health status of the storage device that holds the object_dir. See Sec. 3.3.3 for more information. |

http_port

| Services | DIR, MRC, OSD |

| Values | 1 .. 65535 |

| Default | 30636 (MRC), 30638 (DIR), 30640 (OSD) |

| Description | Specifies the listen port for the HTTP service that returns the status page. |

ignore_capabilities optional

| Services | OSD |

| Values | true, false |

| Default | false |

| Description | When set to true, capability checks on the OSD are disabled. This property should only be set to true for debugging purposes, as it effectively overrides any security mechanisms on the system. |

listen.address optional

| Services | DIR, MRC, OSD |

| Values | IP address |

| Default | |

| Description | If specified, it defines the interface to listen on. If not specified, the service will listen on all interfaces (any). |

listen.port

| Services | DIR, MRC, OSD |

| Values | 1 .. 65535 |

| Default | DIR: 32638, MRC: 32636, OSD: 32640 |

| Description | The port to listen on for incoming connections (TCP). The OSD uses the specified port for both TCP and UDP. Please make sure to configure your firewall to allow incoming TCP traffic (plus UDP traffic, in case of an OSD) on the specified port. |
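As an illustration of the firewall note, the following sketch prints iptables rules that open the default listen.port of each service (TCP, plus UDP for the OSD). It only prints the commands for review; the helper name is hypothetical, the ports are the defaults from the table above and must be adapted if you changed listen.port, and other ports such as http_port are not covered.

```shell
# print_firewall_rules SERVICE (hypothetical helper)
# Prints iptables commands that accept traffic on the default
# listen.port of SERVICE (dir, mrc or osd).
print_firewall_rules() {
    case $1 in
        dir) echo "iptables -A INPUT -p tcp --dport 32638 -j ACCEPT" ;;
        mrc) echo "iptables -A INPUT -p tcp --dport 32636 -j ACCEPT" ;;
        osd) echo "iptables -A INPUT -p tcp --dport 32640 -j ACCEPT"
             # The OSD also uses UDP on the same port.
             echo "iptables -A INPUT -p udp --dport 32640 -j ACCEPT" ;;
        *)   echo "unknown service: $1" >&2; return 1 ;;
    esac
}

# Usage: review the output, then run it as root, e.g.
# print_firewall_rules osd | sh
```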

local_clock_renewal

| Services | MRC, OSD |

| Values | milliseconds |

| Default | 0 |

| Description | Reading the system clock is a slow operation on some systems (e.g. Linux) as it is a system call. To increase performance, XtreemFS services use a local variable which is only updated every local_clock_renewal milliseconds. As of XtreemFS 1.4, this optimization is disabled. |

max_client_queue

| Services | OSD |

| Values | 1 .. N |

| Default | 100 |

| Description | To avoid overloading the server by a single client, the maximum number of pending requests per client is limited. Usually, this limit should not be exceeded unless you use read/write file replication in combination with asynchronous writes and a large number of allowed pending writes on the client side (see Sec. 4.3.4). |

monitoring.enabled

| Services | DIR |

| Values | true, false |

| Default | false |

| Description | Enables the built-in monitoring tool in the directory service. If enabled, the DIR will send alert emails if services have crashed (i.e. do not send heartbeat messages). No alerts will be sent for services which have signed off at the DIR. To enable monitoring, you also need to configure monitoring.email.receiver and monitoring.email.program. In addition, you may want to change the values of monitoring.email.sender, monitoring.max_warnings and monitoring.service_timeout_s. |

monitoring.email.programm

| Services | DIR |

| Values | path |

| Default | /usr/sbin/sendmail |

| Description | Location of the sendmail binary to be used for sending alert mails. See monitoring.enabled. |

monitoring.email.receiver

| Services | DIR |

| Values | email address |

| Default | - |

| Description | Email address of the recipient of alert emails. See monitoring.enabled. |

monitoring.email.sender

| Services | DIR |

| Values | email address |

| Default | "XtreemFS DIR service <dir@localhost>" |

| Description | Email address and sender name to use for sending alert mails. See monitoring.enabled. |

monitoring.max_warnings

| Services | DIR |

| Values | 0..N |

| Default | 1 |

| Description | Number of alert mails to send for a single service which has crashed or disconnected. Each alert mail contains a summary of all crashed or disconnected services. See monitoring.enabled. |

monitoring.service_timeout_s

| Services | DIR |

| Values | 0..N seconds |

| Default | 300 |

| Description | Time to wait for a heartbeat message before sending an alert email. See monitoring.enabled. |

multihoming.enabled optional

| Services | MRC, OSD |

| Values | true, false |

| Default | false |

| Description | If set to true, the service will use every interface installed in the system and propagate their addresses to the DIR. If hostname is set, its address will be used as the default endpoint that should be reachable from any network. Otherwise, the service tries to discover the hostname itself. If this parameter is true, listen.address must not be set. |

multihoming.renewal_signal optional

| Services | MRC, OSD |

| Values | true, false |

| Default | false |

| Description | If set to true, the service will try to register a signal handler for USR2, upon which it refreshes its address mapping. Since some Java Virtual Machines (JVMs) use USR2 internally by default, this parameter should be used together with the JVM flag -XX:+UseAltSigs. This functionality is helpful if networks are dynamically added to or removed from the system and the XtreemFS server should update its list of reachable networks. |

no_atime

| Services | MRC |

| Values | true, false |

| Default | true |

| Description | The POSIX standard defines that the atime (timestamp of last file access) is updated each time a file is opened, even for reading. This means that there is a write to the database and hard disk on the MRC each time a file is read. To reduce the load, many file systems (e.g. ext3), including XtreemFS, can be configured to skip these updates. We strongly recommend disabling atime updates by setting this parameter to true. |

object_dir

| Services | OSD |

| Values | absolute file system path to a directory |

| Default | /var/lib/xtreemfs/osd/ |

| Description | The directory in which the OSD stores the objects. This directory should never be on the same partition as any DIR or MRC database if both services reside on the same machine. Otherwise, deadlocks may occur if the partition runs out of free disk space! |
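To check that object_dir and the DIR/MRC database directories (babudb.baseDir, babudb.logDir) are not on the same partition, you can compare the mount points that df reports for them. A sketch using POSIX df -P; the helper name is hypothetical, both directories must already exist, and mount points containing spaces are not handled.

```shell
# same_partition DIR1 DIR2 (hypothetical helper)
# Exit status 0 if both directories are on the same mounted file system
# (judged by the mount point in the last column of "df -P"), 1 if not,
# and 2 if one of them does not exist.
same_partition() (
    [ -d "$1" ] && [ -d "$2" ] || exit 2
    m1=$(df -P "$1" | awk 'NR == 2 { print $NF }')
    m2=$(df -P "$2" | awk 'NR == 2 { print $NF }')
    [ -n "$m1" ] && [ "$m1" = "$m2" ]
)

# Usage:
# if same_partition /var/lib/xtreemfs/osd /var/lib/xtreemfs/mrc/database; then
#     echo "warning: OSD data and MRC database share a partition" >&2
# fi
```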

osd_check_interval

| Services | MRC |

| Values | seconds |

| Default | 300 |

| Description | The MRC regularly asks the directory service for suitable OSDs to store files on (see OSD Selection Policy, Sec. 7.3). This parameter defines the interval between two updates of the list of suitable OSDs. |

policy_dir optional

| Services | MRC, OSD, DIR |

| Values | absolute file system path to a directory |

| Default | |

| Description | Directory containing user-defined policies and modules. When starting a service, the policy directory will be searched for custom policies. For further details on pluggable policies, see chapter 7. |

remote_time_sync

| Services | MRC, OSD |

| Values | milliseconds |

| Default | 30000 |

| Description | MRCs and OSDs all synchronize their clocks with the directory service to ensure a loose clock synchronization of all services. This is required for leases to work correctly. This parameter defines the interval in milliseconds between time updates from the directory service. |

renew_to_caps optional

| Services | MRC |

| Values | true, false |

| Default | false |

| Description | If set to true, the MRC allows capabilities to be renewed after they timed out. This parameter should only be used for debugging purposes, as it effectively overrides the revocation of access rights on a file. |

report_free_space

| Services | OSD |

| Values | true, false |

| Default | true |

| Description | If set to true, the OSD will report its free space to the directory service. Otherwise, it will report zero, which will cause the OSD not to be used by the OSD Selection Policies (see Sec. 7.3). |

socket.send_buffer_size optional

| Services | OSD |

| Values | size in bytes |

| Default | -1 |

| Description | The send buffer size in bytes for sockets. -1 indicates that the default value (typically 128k) is used. |

snmp.enabled optional

| Services | DIR, MRC, OSD |

| Values | String, either true or false |

| Default | false |

| Description | Must be set if the SNMP agent of XtreemFS should be used for monitoring. See section 3.3.6. |

snmp.address optional

| Services | DIR, MRC, OSD |

| Values | IP address or hostname |

| Default | localhost |

| Description | If specified, it defines the interface for the SNMP agent to listen on. If not specified, the SNMP agent will listen on all interfaces (any). See section 3.3.6. |

snmp.port optional

| Services | DIR, MRC, OSD |

| Values | 1 .. 65535 |

| Default | 34636 (MRC), 34638 (DIR), 34640 (OSD) |

| Description | Specifies the listen port for the SNMP agent. See section 3.3.6. |

snmp.aclfile optional

| Services | DIR, MRC, OSD |

| Values | path to file |

| Default | /etc/xtreemfs/snmp.acl |

| Description | Specifies the path to the ACL file for SNMP access. See section 3.3.6. |

ssl.enabled

| Services | DIR, MRC, OSD |

| Values | true, false |

| Default | false |

| Description | If set to true, the service will use SSL to authenticate and encrypt connections. The service will not accept non-SSL connections if ssl.enabled is set to true. |

ssl.grid_ssl

| Services | DIR, MRC, OSD |

| Values | true, false |

| Default | false |

| Description | In this mode, the services and client will only use SSL for mutual authentication with X.509 certificates (SSL handshake). After successful authentication, communication takes place via plain TCP. This means that records are neither encrypted nor signed! This mode is comparable to HTTP connections with Digest authentication. It should be used when certificate-based authentication is required but performance is more important than security, which is usually true in GRID installations. If this mode is enabled, all client tools must be used with the pbrpcg:// scheme prefix. |

ssl.service_creds

| Services | DIR, MRC, OSD |

| Values | path to file |

| Default | DIR: /etc/xos/xtreemfs/truststore/certs/ds.p12, MRC: /etc/xos/xtreemfs/truststore/certs/mrc.p12, OSD: /etc/xos/xtreemfs/truststore/certs/osd.p12 |

| Description | Must be specified if ssl.enabled is set to true. Specifies the file containing the service credentials (X.509 certificate and private key). Either PKCS#12 or JKS format can be used; set ssl.service_creds.container accordingly. This file is used during the SSL handshake to authenticate the service. |

ssl.service_creds.container

| Services | DIR, MRC, OSD |

| Values | pkcs12 or JKS |

| Default | pkcs12 |

| Description | Must be specified if ssl.enabled is set to true. Specifies the file format of the ssl.service_creds file. |

ssl.service_creds.pw

| Services | DIR, MRC, OSD |

| Values | String |

| Default | |

| Description | Must be specified if ssl.enabled is set to true. Specifies the password which protects the credentials file ssl.service_creds. |

ssl.trusted_certs

| Services | DIR, MRC, OSD |

| Values | path to file |

| Default | /etc/xos/xtreemfs/truststore/certs/xosrootca.jks |

| Description | Must be specified if ssl.enabled is set to true. Specifies the file containing the trusted root certificates (e.g. CA certificates) used to authenticate clients. |

ssl.trusted_certs.container

| Services | DIR, MRC, OSD |

| Values | pkcs12 or JKS |

| Default | JKS |

| Description | Must be specified if ssl.enabled is set to true. Specifies the file format of the ssl.trusted_certs file. |

ssl.trust_manager optional

| Services | DIR, MRC, OSD |

| Values | Java class name |

| Default | |

| Description | Sets a custom trust manager class for SSL connections. The trust manager is responsible for checking certificates when SSL connections are established. |

ssl.trusted_certs.pw

| Services | DIR, MRC, OSD |

| Values | String |

| Default | |

| Description | Must be specified if ssl.enabled is set to true. Specifies the password which protects the trusted certificates file ssl.trusted_certs. |
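The ssl.* options above can be generated per service to avoid copy-and-paste mistakes in the credentials path. A sketch under assumptions: the helper name print_ssl_config is hypothetical, the file names and passwords are the sample values from Sec. 3.2.5, and setting ssl.grid_ssl = true in addition to ssl.enabled = true is assumed to be how Grid SSL mode is selected.

```shell
# print_ssl_config SERVICE [grid] (hypothetical helper)
# Prints the SSL options of the sample setup for SERVICE (dir, mrc or
# osd); pass "grid" as the second argument to also enable Grid SSL mode.
print_ssl_config() (
    case $1 in
        dir | mrc | osd) ;;
        *) echo "usage: print_ssl_config dir|mrc|osd [grid]" >&2; exit 1 ;;
    esac
    certs=/etc/xos/xtreemfs/truststore/certs
    cat <<EOF
ssl.enabled = true
ssl.service_creds = $certs/$1.p12
ssl.service_creds.pw = passphrase
ssl.service_creds.container = pkcs12
ssl.trusted_certs = $certs/trusted.jks
ssl.trusted_certs.pw = jks_passphrase
ssl.trusted_certs.container = jks
EOF
    if [ "${2:-}" = grid ]; then
        # Assumption: Grid SSL is enabled on top of ssl.enabled = true.
        echo "ssl.grid_ssl = true"
    fi
)
```

Review the output of, e.g., print_ssl_config mrc and merge it into the MRC configuration file, replacing any existing ssl.* lines, since each parameter should occur only once.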

startup.wait_for_dir

| Services | MRC, OSD |

| Values | 0..N seconds |

| Default | 30 |

| Description | Time to wait for the DIR to become available during start up of the MRC and OSD. If the DIR does not respond within this time, the MRC or OSD will abort startup. |

storage_layout optional

| Services | OSD |

| Values | HashStorageLayout |

| Default | HashStorageLayout |

| Description | Adjusts the internally used storage layout on the OSD. The storage layout determines how an OSD stores its files and objects. Currently, only HashStorageLayout is supported. |

storage_threads optional

| Services | OSD |

| Values | 1..N |

| Default | 1 |

| Description | Sets the number of threads used to access the underlying file system. For spinning disks, the default value is recommended. For SSDs, a higher number might be beneficial.

|

uuid

| Services | MRC, OSD |

| Values | String, but limited to alphanumeric characters, - and . |

| Default | |

| Description | Must be set to a unique identifier, preferably a UUID according to RFC 4122. UUIDs can be generated with uuidgen. Example: eacb6bab-f444-4ebf-a06a-3f72d7465e40.

|
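The uuid value can also be generated programmatically. Below is a minimal sketch that uses Python's standard uuid module to produce a random (version 4) RFC 4122 UUID, similar to what uuidgen typically prints. It also checks a value against the character restriction of this option. The helper names are hypothetical.

```python
import re
import uuid

# Characters allowed for the uuid option: alphanumerics, '-' and '.'.
_VALID_UUID_VALUE = re.compile(r"[A-Za-z0-9.-]+")


def new_service_uuid():
    """Return a random RFC 4122 version 4 UUID as a string."""
    return str(uuid.uuid4())


def is_valid_uuid_value(value):
    """Check a uuid setting against the option's character restriction."""
    return _VALID_UUID_VALUE.fullmatch(value) is not None
```

For example, `print(f"uuid = {new_service_uuid()}")` emits a line that can be pasted into an MRC or OSD configuration file.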

vivaldi.max_clients

| Services | DIR |

| Values | 0..N |

| Default | 32 |

| Description | Sets the maximum number of client coordinates to be remembered. 0 deactivates the client visualization (clients might still send their coordinates, though).

|

vivaldi.client_timeout

| Services | DIR |

| Values | 1..N milliseconds |

| Default | 600000 |

| Description | Set a timeout (in ms) after which a client's data set will be removed. This value should be set with respect to the recalculation interval of the Vivaldi algorithm set on the client.

|

vivaldi.recalculation_interval_ms

| Services | OSD |

| Values | 1..N milliseconds |

| Default | 300000 |

| Description | The time between two recalculations of the Vivaldi coordinates is randomly chosen as vivaldi.recalculation_interval_ms +/- vivaldi.recalculation_epsilon_ms.

|

vivaldi.recalculation_epsilon_ms

| Services | OSD |

| Values | 1..N milliseconds |

| Default | 30000 |

| Description | See vivaldi.recalculation_interval_ms.

|
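The randomized recalculation period described for vivaldi.recalculation_interval_ms and vivaldi.recalculation_epsilon_ms can be sketched as follows. The guide only states that the period is chosen randomly within interval +/- epsilon. The uniform draw and the function name are assumptions.

```python
import random


def next_recalculation_delay_ms(interval_ms=300000, epsilon_ms=30000, rng=random):
    """Pick the delay until the next Vivaldi recalculation.

    Assumes a uniform draw from [interval_ms - epsilon_ms, interval_ms + epsilon_ms];
    the defaults are the documented defaults of the two options.
    """
    return rng.randint(interval_ms - epsilon_ms, interval_ms + epsilon_ms)
```

With the defaults, every delay falls between 270000 ms and 330000 ms. The jitter keeps OSDs from recalculating their coordinates in lockstep.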

vivaldi.iterations_before_updating

| Services | OSD |

| Values | 1..N |

| Default | 12 |

| Description | The number of Vivaldi iterations before a new list of peers (OSDs) will be fetched from the DIR.

|

vivaldi.max_retries_for_a_request

| Services | OSD |

| Values | 1..N |

| Default | 2 |

| Description | Number of retries before accepting a 'suspiciously high' RTT.

|

vivaldi.max_request_timeout_ms

| Services | OSD |

| Values | 1..N milliseconds |

| Default | 10000 |

| Description | Maximum number of milliseconds an OSD waits for a response before discarding its corresponding request (expiration times smaller than vivaldi.timer_interval_ms are not granted).

|

vivaldi.timer_interval_ms

| Services | OSD |

| Values | 1..N milliseconds |

| Default | 600000 |

| Description | Period of time between two Vivaldi probes.

|

3.3 Execution and Monitoring

This section describes how to execute and monitor XtreemFS services.

3.3.1 Starting and Stopping the XtreemFS services

If you installed a pre-packaged release, you can start, stop, and restart the services with the init.d scripts:

$> /etc/init.d/xtreemfs-dir start

$> /etc/init.d/xtreemfs-mrc start

$> /etc/init.d/xtreemfs-osd start

or

$> /etc/init.d/xtreemfs-dir stop

$> /etc/init.d/xtreemfs-mrc stop

$> /etc/init.d/xtreemfs-osd stop

To run init.d scripts, root permissions are required. Note that MRC and OSD will wait for the Directory Service to become available before they start up. Once a Directory Service as well as at least one OSD and MRC are running, XtreemFS is operational.

3.3.2 Running multiple OSDs per Machine

Running more than one OSD service per host can be useful in various situations, for example on machines with more than one disk (as an alternative to a local RAID) or for testing purposes. We provide an extended init.d script, named xtreemfs-osd-farm, that starts or stops a set of OSDs on one host with a single command.

The xtreemfs-osd-farm script can be found in the /usr/share/xtreemfs directory if XtreemFS was installed from the provided packages, or in the contrib directory of the XtreemFS Git repository.

Using the xtreemfs-osd-farm script requires two steps. First, a space-separated list of names for all OSDs has to be assigned to the OSD_INSTANCES variable in the script. Second, a configuration file named <osdname>.config.properties has to be created in /etc/xos/xtreemfs for each of the defined OSD names, where <osdname> is replaced by the particular OSD name. After these steps, the init.d script can be executed with the usual arguments start, stop, status, restart, and try-restart. A single OSD can be controlled with xtreemfs-osd-farm <osdname> <argument>.
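Preparing one configuration file per OSD is repetitive, so the second step can be scripted. This sketch makes three assumptions:

- Object directories follow a hypothetical layout under /var/lib/xtreemfs.
- Each OSD gets a distinct status-page port counting up from the OSD default of 30640.
- Only the uuid, object_dir, and http_port settings are generated.

The remaining settings of a working OSD configuration, such as the DIR address and a unique RPC listen port, still have to be added by hand.

```python
import uuid

CONFIG_DIR = "/etc/xos/xtreemfs"  # where xtreemfs-osd-farm expects the files
DEFAULT_OSD_HTTP_PORT = 30640      # default OSD status-page port


def osd_farm_configs(osd_instances, object_root="/var/lib/xtreemfs"):
    """Map each name in an OSD_INSTANCES string to (config path, config text).

    object_root and the <object_root>/<osdname>/objs layout are hypothetical.
    Only uuid, object_dir and http_port are generated; all other settings
    (e.g. the DIR address and a unique RPC listen port) must still be added.
    """
    configs = {}
    for index, name in enumerate(osd_instances.split()):
        settings = [
            f"uuid = {uuid.uuid4()}",                         # unique per OSD
            f"object_dir = {object_root}/{name}/objs",        # one directory per OSD
            f"http_port = {DEFAULT_OSD_HTTP_PORT + index}",   # distinct status ports
        ]
        path = f"{CONFIG_DIR}/{name}.config.properties"
        configs[name] = (path, "\n".join(settings) + "\n")
    return configs
```

Iterating over `osd_farm_configs("osd1 osd2 osd3").items()` yields each target path and its text. The text can be written to that path (as root) after the missing settings have been appended.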

3.3.3 Monitoring OSD's storage devices

The health status of an OSD's storage devices (e.g. the SMART health test of the disks used) can be used to determine whether an OSD will fail soon or has already failed. If the health status is available, the default OSD selection policy (id 1000) can use it to exclude OSDs with a critical status (WARNING or FAILED). To test the health status, the OSD executes a user-defined script, which can be configured with the health_check parameter in the OSD configuration file. If you want to write such a script, consider the following:

- The script is executed with the object_dir as its first (and only) parameter.

- The script must exit with one of the following return values:

  - 0 - PASSED: The storage device is in an uncritical state. The OSD is not filtered out when the default OSD selection policy is used.

  - 1 - WARNING: The storage device could soon be in a critical state. If the default OSD selection policy is used, whether the OSD is filtered out depends on the osd_health_status attribute.

  - 2 - FAILED: The storage device is in a critical state. The OSD is filtered out when the default OSD selection policy is used.

  - 3 - Not Available: The health status is not available. This value is also used when an error occurs or the script returns an invalid value.

- Additionally, the output of the script (stdout) is sent to the DIR and shown in the web interface.

An example script for Linux systems is provided in /usr/share/xtreemfs/osd_health_check.sh. This script uses the SMART health test result of the devices that store the object_dir. The script supports software RAID configurations (/dev/md*) and single devices (/dev/sd* or /dev/hd*). To use this script, smartmontools must be installed, and the smartctl command must be executable with sudo privileges without a password.
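As an illustration of the exit-code contract for health_check scripts, here is a minimal sketch of a custom script. It deliberately swaps the SMART test for a simpler free-disk-space check on the file system that holds object_dir. The 10 % and 2 % thresholds are illustrative assumptions, not XtreemFS defaults.

```python
import shutil

# Exit codes expected by the OSD.
PASSED, WARNING, FAILED, NOT_AVAILABLE = 0, 1, 2, 3


def health_status(object_dir, warn_free=0.10, fail_free=0.02):
    """Classify the file system holding object_dir by its free-space ratio.

    Returns (exit code, message). The thresholds are illustrative only.
    """
    try:
        usage = shutil.disk_usage(object_dir)
    except OSError as exc:
        return NOT_AVAILABLE, f"cannot inspect {object_dir}: {exc}"
    if usage.total == 0:
        return NOT_AVAILABLE, f"no size information for {object_dir}"
    free = usage.free / usage.total
    message = f"{free:.1%} free on the file system holding {object_dir}"
    if free < fail_free:
        return FAILED, message
    if free < warn_free:
        return WARNING, message
    return PASSED, message


def main(argv):
    """Entry point: argv[1] is the object_dir passed by the OSD."""
    if len(argv) != 2:
        print("usage: health_check <object_dir>")
        return NOT_AVAILABLE
    status, message = health_status(argv[1])
    print(message)  # stdout is forwarded to the DIR and shown in its web interface
    return status
```

To use it as the health_check script, save it as an executable file starting with `#!/usr/bin/env python3`, add `import sys`, and end it with `sys.exit(main(sys.argv))`.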

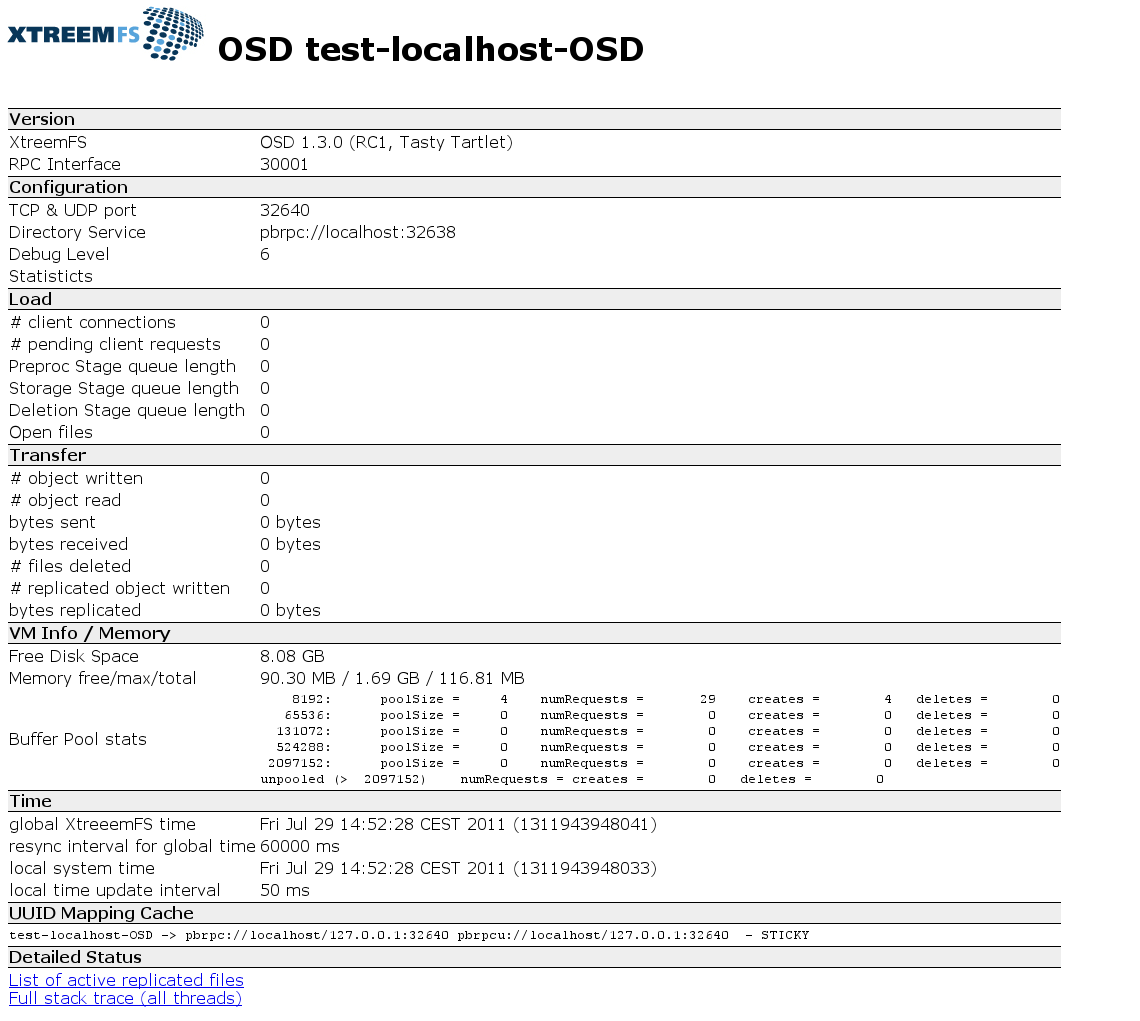

3.3.4 Web-based Status Page

Figure 3.1: OSD status web page

Each XtreemFS service can generate an HTML status page, which displays runtime information about the service (Fig. 3.1). The HTTP server that generates the status page runs on the port defined by the configuration property http_port; default values are 30636 for MRCs, 30638 for Directory Services, and 30640 for OSDs.

For example, the status page of an MRC can be shown by opening

http://my-mrc-host.com:30636/

with a common web browser. If you set an admin password in the service's configuration, you will be asked for authentication when accessing the status page. Use admin as user name.
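The status page can also be fetched from a script, e.g. for periodic snapshots. This sketch assumes that the password prompt uses HTTP Basic authentication with the user name admin. The helper names and the admin_password argument are hypothetical.

```python
import urllib.request

# Default http_port values listed in this section.
DEFAULT_HTTP_PORTS = {"MRC": 30636, "DIR": 30638, "OSD": 30640}


def status_page_url(host, service, port=None):
    """Build the status-page URL, falling back to the service's default http_port."""
    if port is None:
        port = DEFAULT_HTTP_PORTS[service]
    return f"http://{host}:{port}/"


def fetch_status_page(url, admin_password=None, timeout=10):
    """Return the HTML status page; answers an auth challenge as user 'admin'."""
    handlers = []
    if admin_password is not None:
        passwords = urllib.request.HTTPPasswordMgrWithDefaultRealm()
        passwords.add_password(None, url, "admin", admin_password)
        handlers.append(urllib.request.HTTPBasicAuthHandler(passwords))
    opener = urllib.request.build_opener(*handlers)
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, `fetch_status_page(status_page_url("my-mrc-host.com", "MRC"))` retrieves the MRC page shown above.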

3.3.5 DIR Service Monitoring

The directory service has a built-in notification system that can send alert emails if a service fails to send heartbeat messages for some time. The monitoring can be enabled in the DIR configuration by setting monitoring = true.

3.3.6 Monitoring Services with SNMP

All XtreemFS services can act as an SNMP agent, which makes it possible to monitor status information via the SNMP protocol. To enable SNMP support for a specific service, set the following parameters in its configuration file:

snmp.enabled = true

snmp.address = localhost

snmp.port = 34638

snmp.aclfile = /etc/xos/xtreemfs/snmp.acl

If snmp.enabled is not set to true, the service does not start an SNMP agent, and all other SNMP-related configuration parameters are ignored. snmp.port defines the port on which the SNMP agent listens, and snmp.address the interface it is bound to. Since XtreemFS uses SNMPv2, which is known to be insecure, it is recommended to use a dedicated monitoring network and a dedicated interface. The optional snmp.aclfile parameter is the path to a JDMK (Java Dynamic Management Kit, the library used to provide SNMP functionality in XtreemFS) ACL file. In this file you can define which hosts may use which kinds of SNMP features. Because the XtreemFS SNMP agent only provides read access to values via SNMP, the following example ACL file is sufficient:

acl = {

{

communities = public

access = read-only

managers = localhost

}

}

communities is the SNMP community string your managers have to use, and managers is a comma-separated list of hostnames, IP addresses, or subnets in prefix notation (e.g. "xtreemfs-host", "192.168.0.54" or "192.168.0.3/24").

Note: If you comment out the snmp.aclfile line, every host on your network can access all information through the SNMP agent.
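When several monitoring hosts are involved, the ACL file can be generated instead of edited by hand. Here is a minimal sketch that reproduces the read-only layout of the example ACL file. Separating managers with ", " is an assumption about what the JDMK parser accepts, and the function name is hypothetical.

```python
def jdmk_acl(managers, community="public"):
    """Render a read-only ACL for the given managers (hosts, IPs or subnets)."""
    if not managers:
        raise ValueError("at least one manager is required")
    for manager in managers:
        # Each entry must be a single host, address or prefix, not a list.
        if "," in manager or not manager.strip():
            raise ValueError(f"invalid manager entry: {manager!r}")
    return (
        "acl = {\n"
        "  {\n"
        f"  communities = {community}\n"
        "  access = read-only\n"
        f"  managers = {', '.join(managers)}\n"
        "  }\n"
        "}\n"
    )
```

The returned text can be written to the file named by snmp.aclfile, e.g. /etc/xos/xtreemfs/snmp.acl.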

If SNMP is configured correctly, an easy way to check whether the SNMP agent is running is the snmpget command-line tool:

$> snmpget -v2c -cpublic localhost:34638 1.3.6.1.4.1.38350.1.1.0

where "public" is the community string and the SNMP agent listens on port 34638 on localhost. "1.3.6.1.4.1.38350.1.1.0" is the OID (object identifier) associated with the amount of memory currently used by the JVM of this service. The following tables list all queryable OIDs and the information they represent.
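The snmpget check can be scripted to poll several values at once. This sketch shells out to the net-snmp snmpget tool and uses its -Oqv output option so that only the value is printed. The helper names are hypothetical, and only a few OIDs from the tables that follow are included.

```python
import subprocess

# A few OIDs from the "General" table (valid for all services).
GENERAL_OIDS = {
    "jvmUsedMemory": "1.3.6.1.4.1.38350.1.1.0",
    "jvmMaxMemory": "1.3.6.1.4.1.38350.1.2.0",
    "isRunning": "1.3.6.1.4.1.38350.1.11.0",
    "serviceType": "1.3.6.1.4.1.38350.1.12.0",
}


def snmpget_command(name, agent="localhost:34638", community="public"):
    """Build the snmpget call; -Oqv makes net-snmp print only the value."""
    return ["snmpget", "-v2c", f"-c{community}", "-Oqv", agent, GENERAL_OIDS[name]]


def snmp_value(name, **kwargs):
    """Run snmpget and return its trimmed output (needs the net-snmp tools)."""
    result = subprocess.run(snmpget_command(name, **kwargs),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

String values such as those of isRunning may be returned with surrounding quotes, depending on the net-snmp output settings.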

| General: this information applies to all services |

| OID | Textual representation | Datatype | Description |

| 1.3.6.1.4.1.38350.1.1.0 | jvmUsedMemory | Long | The amount of memory used by the JVM this service is running in. |

| 1.3.6.1.4.1.38350.1.2.0 | jvmMaxMemory | Long | The maximum amount of memory the JVM can use. |

| 1.3.6.1.4.1.38350.1.3.0 | jvmFreeMemory | Long | The amount of free memory the JVM can still use. |

| 1.3.6.1.4.1.38350.1.4.0 | rpcInterface | Integer32 | The interface number associated with the Google Protocol Buffers RPC infrastructure. |

| 1.3.6.1.4.1.38350.1.5.0 | databaseVersion | String | The version of the BabuDB database used by the service. |

| 1.3.6.1.4.1.38350.1.6.0 | tcpPort | Integer32 | The TCP port on which the service listens for incoming client connections. |

| 1.3.6.1.4.1.38350.1.7.0 | debugLevel | Integer | The current debug level of the service. |

| 1.3.6.1.4.1.38350.1.8.0 | numClientConnections | Integer32 | Number of active client connections. |

| 1.3.6.1.4.1.38350.1.9.0 | numPendingRequests | Long | The number of currently pending requests. |

| 1.3.6.1.4.1.38350.1.10.0 | currentTime | Long | The global time in this XtreemFS installation. |

| 1.3.6.1.4.1.38350.1.11.0 | isRunning | String | Returns whether the service is running (ONLINE, OFFLINE). |

| 1.3.6.1.4.1.38350.1.12.0 | serviceType | String | Returns which kind of service this is (DIR, MRC, OSD). |